You’ve probably come across the term “crawl budget” in SEO articles and blog posts. It may sound technical, possibly even like a hidden issue on your website that prevents it from appearing in search results.

Here’s the thing. For most small sites, crawl budget doesn’t require constant attention. Google is generally good at crawling and indexing well-structured, compact websites.

That said, as your site grows, or if your platform generates many duplicate URLs or filtered pages, crawl budget can become a significant concern. Even on smaller sites, poor architecture and technical SEO issues can waste crawl resources and slow the indexing of essential pages, often because the site is sending Google the wrong signals in the first place.

In this article, we’ll explain what crawl budget really means, how it works behind the scenes, and how to keep your site clean and crawl-friendly. We’ll also cover when it actually matters and how to recognize warning signs before they affect performance.

What Is Crawl Budget, Really?

Crawl budget is the number of pages Googlebot crawls on your website within a given period. You won’t see a fixed window for it anywhere; crawling happens continuously in the background.

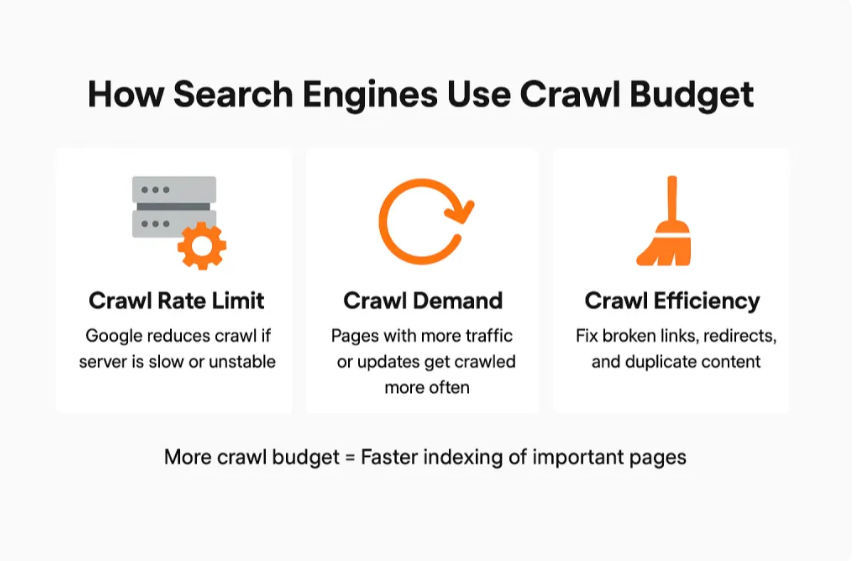

It’s not just a single number. Crawl budget consists of two main factors: crawl rate limit and crawl demand.

Crawl rate limit refers to the number of requests Googlebot can make to your site without overloading your server. If your site slows down or shows errors, Google will reduce its crawling frequency. If it performs well, Google may crawl more pages per day.

Crawl demand refers to the frequency with which Google chooses to crawl specific pages, based on their popularity and freshness. New pages, updated pages, and content with external links are crawled more frequently. Stale or orphaned pages might barely get crawled at all.

Put simply, crawl budget is a mix of how much your server can handle and how much interest Googlebot has in your pages.

What’s more, crawl budget isn’t static. It changes based on your site’s health, relevance, structure, overall optimization, and performance over time. If your site is fast, organized, and consistently updated, Google tends to crawl it more efficiently.

How Google Decides What to Crawl and When

Google doesn’t crawl everything on your site equally. It prioritizes what it believes users care about most, as well as what’s most useful or up to date.

Here’s what drives Google’s crawl behavior:

- Pages that are linked to often, both internally and externally

- Content that’s frequently updated

- Pages you’ve chosen to include in your sitemap

- New URLs discovered during recent crawls

- Signals of value, such as search traffic, backlinks, and link equity

At the same time, crawl efficiency gets hurt by:

- Broken links and redirect chains

- Duplicate pages caused by session IDs or filters

- Orphaned pages with no internal links

- Slow server response times

- Pages marked “noindex” that are still being crawled
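To make the redirect-chain point concrete, here is a minimal Python sketch that measures how many hops a URL takes before it settles. It assumes you already have a mapping of redirects, for example exported from a crawl tool; the `redirect_chain` helper and the sample paths are hypothetical and only illustrate the idea.

```python
def redirect_chain(start, redirects, max_hops=10):
    """Follow redirects from `start` and return every URL visited, in order.

    `redirects` maps a URL to the URL it redirects to (hypothetical data).
    Stops when a URL has no redirect, on a loop, or after `max_hops` hops.
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects and len(chain) <= max_hops:
        current = redirects[current]
        chain.append(current)
        if current in seen:  # we have been here before: redirect loop
            break
        seen.add(current)
    return chain


# Illustrative redirect map, not taken from a real site.
redirects = {
    "/old-page": "/older-page",
    "/older-page": "/new-page",
    "/new-page": "/final-page",
}

chain = redirect_chain("/old-page", redirects)
hops = len(chain) - 1
print(chain, hops)
```

Here `/old-page` needs three hops to reach `/final-page`. Pointing it straight at the final URL would let a crawler get there in one request instead.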

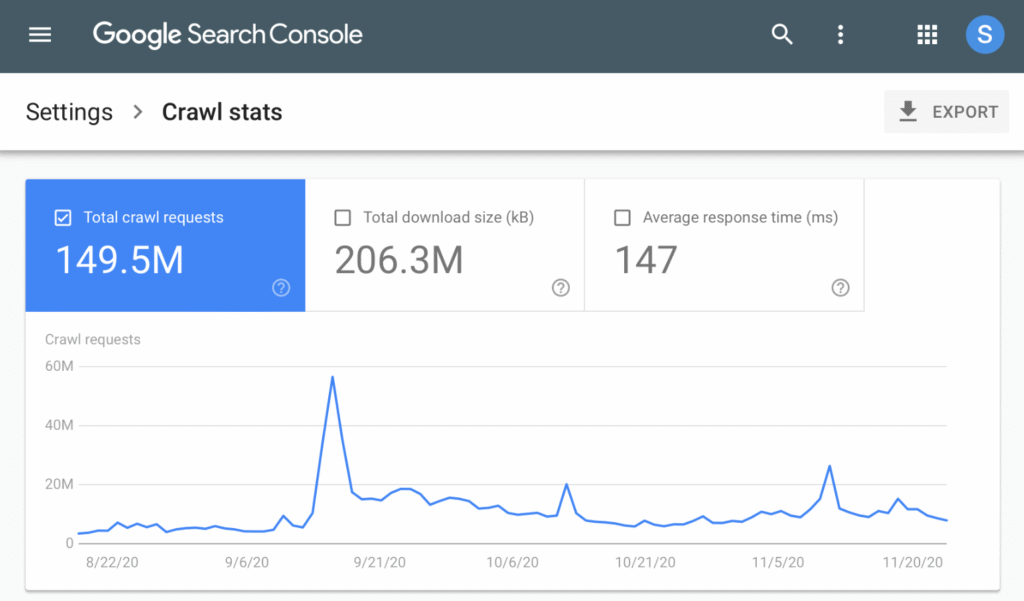

If you want to see how your site’s crawling is performing, Google Search Console’s Crawl Stats report is the place to look. You can view crawl frequency, crawl status codes, response times, and more.

Also keep in mind that a crawled page is not necessarily an indexed page. Google might crawl a page and still decide not to include it in search results if it determines the content to be thin, duplicate, or of low value.

Does Crawl Budget Really Matter for Small Sites?

In most situations, crawl budget is something small site owners don’t need to stress about. If your site has fewer than 1,000 pages, and it’s fast, well-structured, and not overrun with technical problems, Googlebot should be able to crawl it without breaking a sweat.

That said, there are some exceptions.

You might need to start paying attention if:

- Your site generates thousands of URLs due to filters, tags, or duplicate content.

- You run a blog or content site with a deep archive of old posts.

- Your eCommerce platform (like Shopify) creates dozens of parameter-based versions of each product.

- Your CMS or plugins accidentally publish low-value pages at scale.

The bigger your site gets, the more crawl budget becomes relevant. If Googlebot wastes time crawling junk URLs or thin content, it might miss your important new pages.

Here’s a quick checklist to see if crawl budget is something you need to think about:

- Is your site over 5,000 unique URLs?

- Do you have lots of pages with URL parameters or filters?

- Are you seeing “Discovered – currently not indexed” in Search Console?

- Are pages taking weeks to appear in Google after publishing?

- Is your internal linking structure weak or inconsistent?

If you answered “no” to most of these, you’re probably fine. But as your content footprint grows, crawl efficiency becomes more critical.
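If you’re unsure how to answer the question about URL parameters and filters, a rough check is to group your known URLs by what they look like once the noisy parameters are removed. The sketch below assumes you have a list of URLs (from a crawl export or your logs) and that the parameter names in `IGNORED_PARAMS` don’t change the page content on your site. Both the names and the sample URLs are hypothetical.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these parameters only filter, sort, or track, so URLs that
# differ only by them show essentially the same page. Adjust for your site.
IGNORED_PARAMS = {"sessionid", "sort", "color", "utm_source"}


def normalize(url):
    """Return the URL without ignored query parameters or a fragment."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def group_duplicates(urls):
    """Map each normalized URL to its variants, keeping only real groups."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {base: variants for base, variants in groups.items() if len(variants) > 1}


# Illustrative URLs only.
urls = [
    "https://example.com/shoes",
    "https://example.com/shoes?color=red",
    "https://example.com/shoes?sort=price&sessionid=abc123",
    "https://example.com/about",
]

duplicates = group_duplicates(urls)
for base, variants in duplicates.items():
    print(base, "has", len(variants), "variants")
```

If a handful of base pages account for most of your URLs, that is a sign parameter variants may be taking crawl attention away from the pages you care about.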

What Happens When Crawl Budget Becomes a Problem

Once the crawl budget gets stretched too thin, a few problems start to show up:

- New content doesn’t get crawled or indexed quickly. This slows down your SEO performance and keeps fresh pages from ranking.

- Important pages get skipped. If low-value URLs consume the crawl budget, Googlebot may not be able to reach your core pages.

- Duplicate or parameter pages flood your crawl. These can waste resources without providing real value.

- Thin content piles up. Even if Google crawls everything, it may not index pages that don’t meet quality thresholds.

- Crawl frequency drops over time. If your site starts to appear low-value, Googlebot may visit it less frequently.

All of this can lead to slower indexing, reduced visibility, and stagnant rankings. It’s not about penalties—it’s more about missed opportunities to get content discovered.

Simple Ways to Stay Crawl-Efficient

The good news is that crawl efficiency is relatively easy to improve. Here are some practical things you can do:

- Fix broken links and reduce redirect chains. A long redirect path wastes crawl time.

- Keep your internal linking strong. Make sure every critical page is accessible from at least one other page.

- Block unnecessary URLs in your robots.txt file. This includes filter pages, tag pages, or session-based URLs.

- Consolidate thin content. Instead of ten short blog posts, consider combining them into a single, more valuable piece.

- Update your sitemap regularly. Only include URLs that are indexable and important.

- Avoid bloated JavaScript. Google can render JavaScript, but content that only appears after heavy client-side rendering may be crawled and indexed more slowly.

- Use canonical tags to tell Google which version of a page is the primary one.

These changes don’t require deep technical knowledge. They’re about removing clutter and making it easy for Googlebot to focus on what matters.
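Before you publish new robots.txt rules, it helps to confirm they block what you intend and nothing more. Here is a small sketch using Python’s standard `urllib.robotparser` module. The rules and URLs are hypothetical, and the helper name `is_crawlable` is ours. Note that this parser does simple prefix matching, so treat it as a sanity check rather than an exact replica of how Googlebot interprets every rule.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the paths are illustrative only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /tag/
Disallow: /search
Disallow: /cart
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse the rules without fetching anything


def is_crawlable(url, agent="Googlebot"):
    """Return True if the parsed rules allow `agent` to fetch `url`."""
    return parser.can_fetch(agent, url)


# Pages we want crawled, and pages we expect to be blocked.
checks = [
    "https://example.com/blog/crawl-budget",
    "https://example.com/tag/seo",
    "https://example.com/cart",
]

for url in checks:
    print(url, "allowed" if is_crawlable(url) else "blocked")
```

Running a list of your most important URLs through a check like this is a cheap way to catch a rule that accidentally blocks a core page.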

Tools to Monitor Crawl Activity and Spot Issues Early

You don’t need a whole technical team to keep tabs on crawl behavior. A few tools go a long way:

- Google Search Console – Crawl Stats shows how often Googlebot visits your site, how quickly your server responds, and whether any errors occurred.

- Log file analyzers (like Screaming Frog Log File Analyser) give a clear view of which URLs Googlebot is hitting and how often.

- Screaming Frog SEO Spider lets you simulate a crawl to spot broken links, redirect loops, duplicate titles, and other structural problems.

- Sitebulb and JetOctopus offer more advanced crawl analysis, with visuals that help prioritize technical fixes.
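If you’d like a feel for what a log file analyzer does before committing to a tool, the core idea fits in a few lines of Python. This sketch assumes your server writes the common “combined” access log format and simply counts requests whose user agent mentions Googlebot. The sample lines are made up, and because user agent strings can be spoofed, the counts are an approximation.

```python
import re
from collections import Counter

# Assumes the "combined" access log format; adjust the pattern for your server.
LOG_PATTERN = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests per path where the user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_PATTERN.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Made-up log lines for illustration.
sample = [
    f'192.0.2.1 - - [10/May/2024:10:00:00 +0000] "GET /blog/post HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'192.0.2.1 - - [10/May/2024:10:00:05 +0000] "GET /shoes?color=red HTTP/1.1" 200 4096 "-" "{GOOGLEBOT_UA}"',
    f'192.0.2.1 - - [10/May/2024:10:00:09 +0000] "GET /shoes?color=red HTTP/1.1" 200 4096 "-" "{GOOGLEBOT_UA}"',
    '192.0.2.7 - - [10/May/2024:10:01:00 +0000] "GET /blog/post HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

hits = googlebot_hits(sample)
for path, count in hits.most_common():
    print(count, path)
```

Sorting by count shows where crawl activity is concentrated, which makes it easier to spot filter or parameter URLs that get more attention than your key pages.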

What should you monitor regularly?

- Spikes in crawl errors or crawl delays

- Sharp drops in crawl frequency

- A high number of “Discovered – currently not indexed” URLs

- Duplicate content issues flagged in crawl tools

- New pages that aren’t getting crawled within a few days of publishing

Set a reminder to check crawl stats at least once a month, especially if your site is growing.

Crawl Budget Myths That Trip People Up

Let’s clear up a few common misconceptions:

- Myth: More crawling means better rankings.

Not true. Crawl frequency affects how quickly content appears in search results, but doesn’t impact its ranking. Rankings are based on content quality, authority, and relevance.

- Myth: You can ask or pay Google to crawl your site more.

You can’t. Crawl budget is managed algorithmically. Google decides what to crawl based on your site’s performance and structure.

- Myth: Small sites need weekly crawl budget audits.

Most small business websites don’t have crawl issues. Focus on creating high-quality content, effective linking, and a clear structure.

What improves crawl behavior over time? Publishing valuable content, building high-quality links, minimizing technical waste, and maintaining a well-structured site.

Keep Your Site Clean—Google Will Do the Rest

If your site is well-organized, loads quickly, and delivers valuable content, crawl budget isn’t something to worry about. Google will automatically find and index your pages with minimal intervention.

The real key is to avoid technical clutter that wastes time. Focus on your content strategy and internal structure first. That’s what builds authority and encourages consistent crawling.

For small sites, crawl budget is more of a concept than a daily concern. However, if your content grows rapidly or your platform introduces unnecessary complexity, it’s wise to monitor crawl behavior before it slows you down.

Ready to Build a Site That Google Wants to Crawl?

You don’t need to obsess over crawl budget — but you do need a site that’s clean, fast, and built to grow. At Content Author, we help businesses create SEO-first websites and content strategies that make indexing easy and rankings predictable.

Whether you’re launching a new site or scaling an existing one, we’ll help you:

- Identify and fix crawl and indexing issues

- Build a smart, scalable content strategy

- Design a site structure that works for users and search engines

Book your free strategy call today — and let’s make sure your site is ready to compete.